Opinionated Analysis Development

| Question Addressed |
|---|
| Can you re-run the analysis and get the same results? |
| If an external library you’re using is updated, can you still reproduce your original results? |
| If you change code, do you know which downstream code need to be re-executed? |
| If the data or code change but the analysis is not re-executed, will your analysis reflect that it is out-of-date? |
| Can you re-run your analysis with new data and compare it to previous results? |
| Can you surface the code changes that resulted in a different analysis results? |
| Can a second analyst easily understand your code? |
| Can you re-use logic in different parts of the analysis? |
| If you decide to change logic, can you change it in just one place? |
| If your code is not performing as expected, will you know? |
| If your data are corrupted, do you notice? |
| If you make a mistake in your code, will someone notice it? |
| If you are not using efficient code, will you be able to identify it? |
| Can a second analyst easily contribute code to the analysis? |
| If two analysts are developing code simultaneously, can they easily combine them? |
| Can you easily track next steps in your analysis? |
| Can your collaborators make requests outside of meetings or email? |

Source: Parker, Hilary. n.d. “Opinionated Analysis Development.” https://doi.org/10.7287/peerj.preprints.3210v1.

2 Embrace Your Fallibility

2.1 Embrace Your Fallibility

2.1.1 Why are we here?

The unifying interest of data scientists and statisticians is that we want to learn about the world using data.

Working with data has always been tough. It has always been difficult to create analyses that are

- Accurate

- Reproducible and auditable

- Collaborative

Working with data has gotten tougher with time. Data sources, methods, and tools have become more sophisticated. This leaves a lot of us stressed out because errors and mistakes feel inevitable and are embarrassing.

2.1.2 What are we going to do?

Errors and mistakes are inevitable. It’s time to embrace our fallibility.

In The Field Guide to Understanding Human Error (Dekker 2014), the author argues that there are two paradigms:

- Old-World View: errors are the fault of individuals

- New-World View: errors are the fault of flawed systems that fail individuals

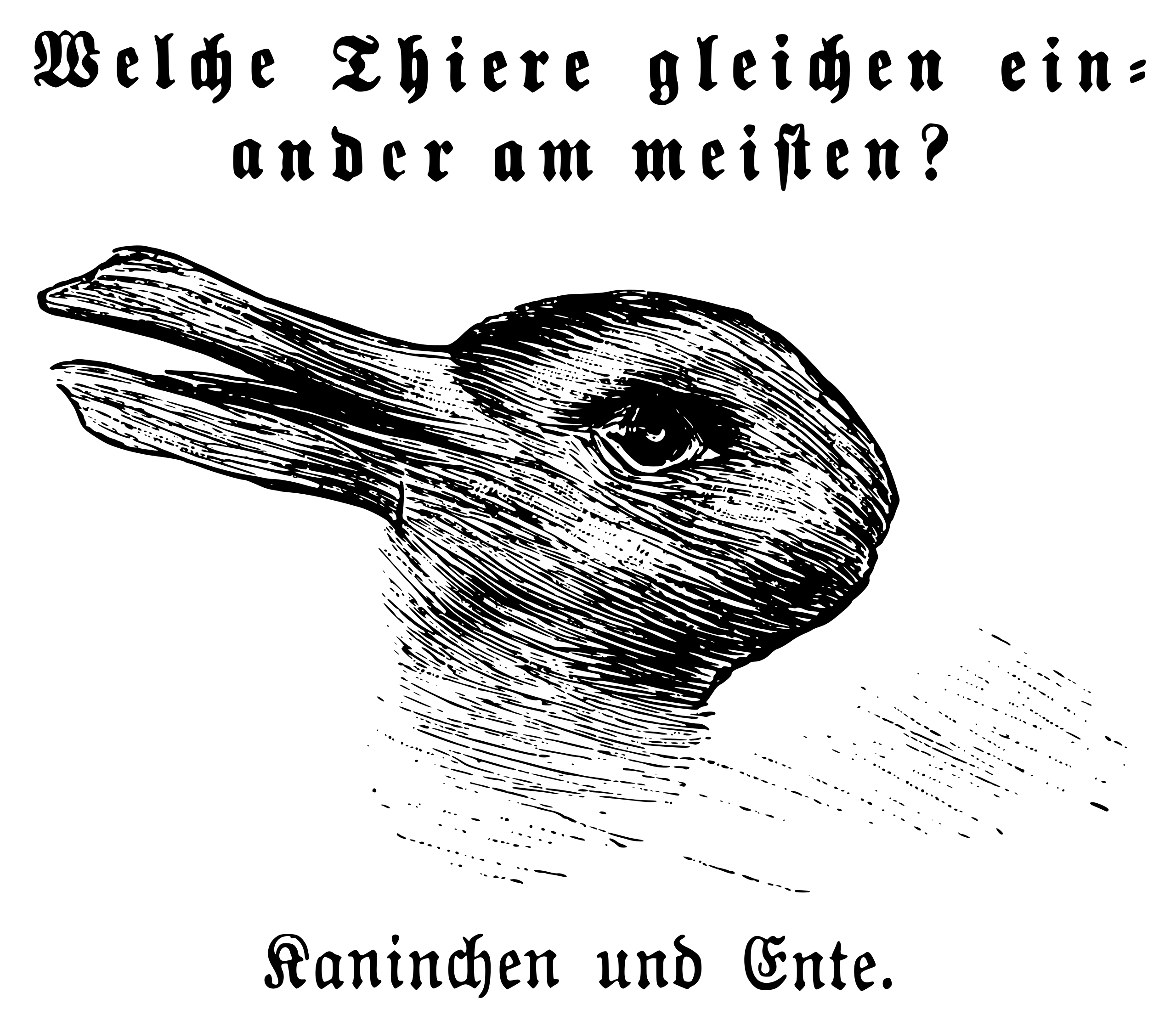

Errors and mistakes are inevitable. This is our gestalt moment, like the famous duck-rabbit illusion in Figure 2.1.

Looking at the demands of research and data analysis, we must no longer see grit as sufficient. Instead, we need to see and use systems that don’t fail us as statisticians and data scientists.

2.1.3 How are we going to do this?

Errors and mistakes are inevitable. We want to adopt evidence-based best practices that minimize the probability of making an error and maximize the probability of catching an inevitable error.

Parker (n.d.) describes a process called opinionated analysis development.

We’re going to adopt the approaches outlined in Opinionated Analysis Development and then actually implement them using modern data science tools.

Through years of applied data analysis, I’ve found these tools to be essential for creating analyses that are

- Accurate

- Reproducible and auditable

- Collaborative

2.2 Why is Modern Data Analysis Difficult to do Well?

Working with data has gotten tougher with time.

- Data are larger on average. For example, the Billion Prices Project scraped prices from all around the world to build inflation indices (Cavallo and Rigobon 2016).

- Complex data collection efforts are more common. For example, Chetty and Friedman (2019) have gained access to massive administrative data sets and used formal privacy to understand inter-generational mobility.

- Open source packages that provide incredible functionality for free change over time.

- Papers like “The garden of forking paths: Why multiple comparisons can be a problem, even when there is no ‘fishing expedition’ or ‘p-hacking’ and the research hypothesis was posited ahead of time” (Gelman and Loken 2013) and “Why Most Published Research Findings Are False” (Ioannidis 2005) have motivated huge increases in transparency, including a focus on pre-registration and computational reproducibility.

There is a growing realization that statistically significant claims in scientific publications are routinely mistaken. A dataset can be analyzed in so many different ways (with the choices being not just what statistical test to perform but also decisions on what data to exclude or exclude, what measures to study, what interactions to consider, etc.), that very little information is provided by the statement that a study came up with a p < .05 result. The short version is that it’s easy to find a p < .05 comparison even if nothing is going on, if you look hard enough—and good scientists are skilled at looking hard enough and subsequently coming up with good stories (plausible even to themselves, as well as to their colleagues and peer reviewers) to back up any statistically-significant comparisons they happen to come up with. ~ Gelman and Loken

From this perspective, what we need is a Truman Show for every researcher, where we can watch their decision-making and the nuances of the decisions they make. That’s impractical! But from the standpoint of good science, pulling back the curtain with computational reproducibility is a way to mitigate these concerns.

Even for a simple analysis, we can ask ourselves an entire set of questions at the end of the analysis. Table 2.1 lists a few of these questions.

2.3 What are we going to do?

Replication is the recreation of findings across repeated studies. It is a cornerstone of science.

Reproducibility is the ability to access data, source code, tools, and documentation and recreate all calculations, visualizations, and artifacts of an analysis.

Computational reproducibility should be the minimum standard for computational social sciences and statistical programming.

We are going to center reproducibility in the practices we maintain, the tools we use, and the culture we foster. By centering reproducibility, we will be able to create analyses that are

- Accurate

- Reproducible and auditable

- Collaborative

Table 2.2 groups these questions by analysis feature and suggests an opinionated approach to each question.

Opinionated Analysis Development

| Opinionated Approach | Question Addressed |
|---|---|
| Reproducible and Auditable | |
| Executable analysis scripts | Can you re-run the analysis and get the same results? |
| Defined dependencies | If an external library you’re using is updated, can you still reproduce your original results? |
| Watchers for changed code and data | If you change code, do you know which downstream code need to be re-executed? |
| Watchers for changed code and data | If the data or code change but the analysis is not re-executed, will your analysis reflect that it is out-of-date? |
| Version control (individual) | Can you re-run your analysis with new data and compare it to previous results? |
| Version control (individual) | Can you surface the code changes that resulted in a different analysis results? |
| Literate programming¹ | Can a second analyst easily understand your code? |
| Accurate Code | |
| Modular, tested, code | Can you re-use logic in different parts of the analysis? |
| Modular, tested, code | If you decide to change logic, can you change it in just one place? |
| Modular, tested, code | If your code is not performing as expected, will you know? |
| Assertive testing of data, assumptions, and results | If your data are corrupted, do you notice? |
| Code review | If you make a mistake in your code, will someone notice it? |
| Code review | If you are not using efficient code, will you be able to identify it? |
| Collaborative | |
| Version control (collaborative) | Can a second analyst easily contribute code to the analysis? |
| Version control (collaborative) | If two analysts are developing code simultaneously, can they easily combine them? |
| Version control (collaborative) | Can you easily track next steps in your analysis? |
| Version control (collaborative) | Can your collaborators make requests outside of meetings or email? |

Source: Parker, Hilary. n.d. “Opinionated Analysis Development.” https://doi.org/10.7287/peerj.preprints.3210v1.

¹ This was originally 'Code Review'
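To make the "Executable analysis scripts" row concrete, the sketch below shows one way a script can return the same result on every run. It is an illustration in base R, not code from Parker's paper; the function name `bootstrap_mean()`, the seed value, and the toy data are all hypothetical.

```r
# Hypothetical analysis step: bootstrap the mean of a numeric vector.
# Setting the seed inside the function makes the random draws repeatable.
bootstrap_mean <- function(x, n_boot = 200, seed = 20240101) {
  set.seed(seed)
  boot_means <- replicate(n_boot, mean(sample(x, replace = TRUE)))
  mean(boot_means)
}

# Toy data for illustration only.
values <- c(3, 7, 8, 12, 15)

first_run <- bootstrap_mean(values)
second_run <- bootstrap_mean(values)

# Re-running the same code on the same data gives the same answer.
identical(first_run, second_run)
```

A fixed seed only controls randomness inside the script. Results can still drift when an external library changes, which is the concern the "Defined dependencies" row addresses.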

2.4 How are we going to do this?

Table 2.3 lists specific tools we can use to adopt each opinionated approach.

Opinionated Analysis Development

| Opinionated Approach | Question Addressed | Tool¹ |
|---|---|---|
| Reproducible and Auditable | | |
| Executable analysis scripts | Can you re-run the analysis and get the same results? | Code first |
| Defined dependencies | If an external library you’re using is updated, can you still reproduce your original results? | library(renv) |
| Watchers for changed code and data | If you change code, do you know which downstream code need to be re-executed? | library(targets)² |
| Watchers for changed code and data | If the data or code change but the analysis is not re-executed, will your analysis reflect that it is out-of-date? | library(targets)² |
| Version control (individual) | Can you re-run your analysis with new data and compare it to previous results? | Git |
| Version control (individual) | Can you surface the code changes that resulted in a different analysis results? | Git |
| Literate programming³ | Can a second analyst easily understand your code? | Quarto |
| Accurate Code | | |
| Modular, tested, code | Can you re-use logic in different parts of the analysis? | Well-tested functions |
| Modular, tested, code | If you decide to change logic, can you change it in just one place? | Well-tested functions |
| Modular, tested, code | If your code is not performing as expected, will you know? | Well-tested functions |
| Assertive testing of data, assumptions, and results | If your data are corrupted, do you notice? | library(assertr) |
| Code review | If you make a mistake in your code, will someone notice it? | GitHub |
| Code review | If you are not using efficient code, will you be able to identify it? | library(microbenchmark)² |
| Collaborative | | |
| Version control (collaborative) | Can a second analyst easily contribute code to the analysis? | GitHub |
| Version control (collaborative) | If two analysts are developing code simultaneously, can they easily combine them? | GitHub |
| Version control (collaborative) | Can you easily track next steps in your analysis? | GitHub |
| Version control (collaborative) | Can your collaborators make requests outside of meetings or email? | GitHub |

Source: Parker, Hilary. n.d. “Opinionated Analysis Development.” https://doi.org/10.7287/peerj.preprints.3210v1.

¹ Added by Aaron R. Williams

² We will not spend much time on these topics

³ This was originally 'Code Review'
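As a small illustration of the "Well-tested functions" rows, here is a minimal sketch in base R. The helper `rescale_01()` and its test values are hypothetical and do not come from Parker's paper; a real project would more likely keep tests like these in a dedicated testing framework rather than inline.

```r
# Hypothetical helper: rescale a numeric vector to the [0, 1] interval.
# Keeping this logic in one function means it can be reused and changed in one place.
rescale_01 <- function(x) {
  stopifnot(is.numeric(x))
  rng <- range(x, na.rm = TRUE)
  if (rng[1] == rng[2]) {
    stop("`x` must contain at least two distinct values.")
  }
  (x - rng[1]) / (rng[2] - rng[1])
}

# Lightweight tests: the script stops here if the function misbehaves.
stopifnot(
  isTRUE(all.equal(rescale_01(c(0, 5, 10)), c(0, 0.5, 1))),
  isTRUE(all.equal(rescale_01(c(2, 4, NA)), c(0, 1, NA))),
  inherits(try(rescale_01(c(1, 1)), silent = TRUE), "try-error")
)
```

Because the tests run every time the script runs, a later change that breaks `rescale_01()` surfaces immediately instead of silently changing downstream results.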

2.4.1 Bonus stuff!

Adopting these opinionated approaches and tools promotes reproducible research. It also provides a bunch of great bonuses.

- Reproducible analyses are easy to scale. Using tools we will cover, we created almost 4,000 county- and city-level websites.

- GitHub offers free web hosting for books and web pages like the notes we’re viewing right now.

- Quarto makes it absurdly easy to build beautiful websites and PDFs.

2.5 Roadmap

The day roughly follows the process for setting up a reproducible data analysis.

- Project organization will cover how to organize all of the files of a data analysis so they are clear and so they work well with other tools.

- Literate programming will cover Quarto, which will allow us to combine narrative text, code, and the output of code into clear artifacts of our data analysis.

- Version control will cover Git and GitHub. These will allow us to organize the process of reviewing and merging code.

- In programming, we’ll discuss best practices for writing code for data analysis like writing modular, well-tested functions and assertive testing of data, assumptions, and results.

- Environment management will cover library(renv) and the process of managing package dependencies while using open-source code.

- If we have time, we can discuss how a positive climate and ethical practices can improve transparency and strengthen science.
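The programming item in the roadmap above mentions assertive testing of data, assumptions, and results. The sketch below illustrates the idea with base R's `stopifnot()` (named conditions require R 4.0 or later) rather than library(assertr). The data frame `counties`, its columns, and the checker `check_counties()` are hypothetical and exist only for illustration.

```r
# Hypothetical example data; in practice this would be read from a file.
counties <- data.frame(
  fips = c("00001", "00002", "00003"),
  population = c(100, 200, 300),
  poverty_rate = c(0.10, 0.20, 0.30),
  stringsAsFactors = FALSE
)

# Hypothetical checker: stop with an informative error if an assumption fails.
check_counties <- function(data) {
  stopifnot(
    "fips must be unique" = !anyDuplicated(data$fips),
    "fips must have five characters" = all(nchar(data$fips) == 5),
    "population must be non-negative" = all(data$population >= 0),
    "poverty_rate must be in [0, 1]" =
      all(data$poverty_rate >= 0 & data$poverty_rate <= 1)
  )
  invisible(data)
}

# Clean data pass through unchanged.
checked <- check_counties(counties)

# A corrupted value stops the analysis instead of flowing downstream.
corrupted <- counties
corrupted$poverty_rate[2] <- 1.7
result <- tryCatch(
  check_counties(corrupted),
  error = function(e) conditionMessage(e)
)
```

Returning the data invisibly lets the check sit in the middle of a pipeline, so the assumptions are tested at the point where the data enter the analysis.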

We sort Table 2.3 into Table 2.4, which outlines the structure of the rest of the day.

Opinionated Analysis Development

| Opinionated Approach | Question Addressed | Tool¹ | Section¹ |
|---|---|---|---|
| Executable analysis scripts | Can you re-run the analysis and get the same results? | Code first | Project Organization |
| Literate programming² | Can a second analyst easily understand your code? | Quarto | Literate Programming |
| Version control (individual) | Can you re-run your analysis with new data and compare it to previous results? | Git | Version Control |
| Version control (individual) | Can you surface the code changes that resulted in a different analysis results? | Git | Version Control |
| Code review | If you make a mistake in your code, will someone notice it? | GitHub | Version Control |
| Version control (collaborative) | Can a second analyst easily contribute code to the analysis? | GitHub | Version Control |
| Version control (collaborative) | If two analysts are developing code simultaneously, can they easily combine them? | GitHub | Version Control |
| Version control (collaborative) | Can you easily track next steps in your analysis? | GitHub | Version Control |
| Version control (collaborative) | Can your collaborators make requests outside of meetings or email? | GitHub | Version Control |
| Modular, tested, code | Can you re-use logic in different parts of the analysis? | Well-tested functions | Programming |
| Modular, tested, code | If you decide to change logic, can you change it in just one place? | Well-tested functions | Programming |
| Modular, tested, code | If your code is not performing as expected, will you know? | Well-tested functions | Programming |
| Assertive testing of data, assumptions, and results | If your data are corrupted, do you notice? | library(assertr) | Programming |
| Defined dependencies | If an external library you’re using is updated, can you still reproduce your original results? | library(renv) | Environment Management |

Source: Parker, Hilary. n.d. “Opinionated Analysis Development.” https://doi.org/10.7287/peerj.preprints.3210v1.

¹ Added by Aaron R. Williams

² This was originally 'Code Review'